
Procedure

The "robots.txt" file tells search engines which pages of your website they may and may not crawl for indexing. Before crawling a website, search engine robots first consult its robots.txt file.

The "robots.txt" file is located at the root of your website (for example, http://votre-domaine.com/robots.txt). It is normally generated automatically when your website is created, but you can modify it to suit your needs.
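
Because the file always sits at the root of the domain, its address can be derived from any page of the site. The following is a minimal sketch using Python's standard `urllib.parse.urljoin`; the function name `robots_txt_url` is hypothetical, and the domain is the placeholder used throughout this article.

```python
from urllib.parse import urljoin


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt address for the site hosting page_url (hypothetical helper).

    The leading slash in "/robots.txt" makes urljoin resolve against the
    domain root, discarding the page's own path.
    """
    return urljoin(page_url, "/robots.txt")


print(robots_txt_url("http://votre-domaine.com/blog/page.html"))
# http://votre-domaine.com/robots.txt
```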

To activate the "robots.txt" file on your website, first log in to your website's administration area.

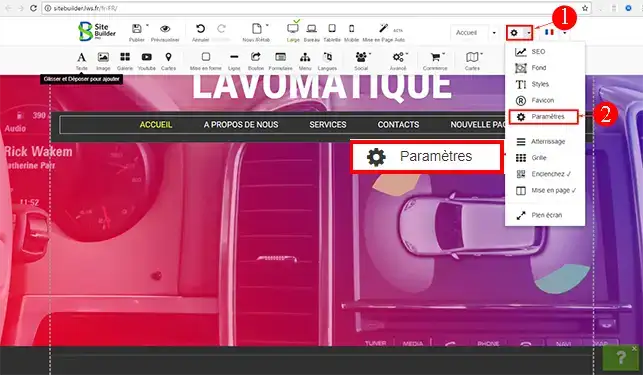

1. Click the "Settings" tab in the SiteBuilder Pro toolbar, then click "Settings" to open the control panel.

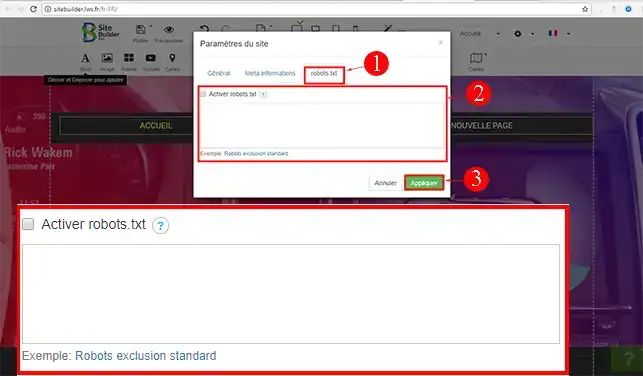

2. Go to the "Robots.txt" tab and tick the "Enable robots.txt" box.

By default, if you don't configure anything else, your "robots.txt" file will contain three lines of code, as follows:

You can modify the "robots.txt" file at any time, for example to give search engines specific instructions. To prevent a page on your site from being indexed, add the following to the "robots.txt" file:

User-Agent: googlebot
Disallow: /page.html
Sitemap: http://votre-domaine.com/sitemap.xml
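
To check how a crawler would read these rules before publishing them, you can test the file locally. The following is a minimal sketch, assuming Python 3.8 or later (needed for `site_maps()`), that feeds the example file to the standard library's `urllib.robotparser`. This is an offline check, not a SiteBuilder Pro feature, and real search engines may interpret edge cases differently.

```python
from urllib.robotparser import RobotFileParser

# The example file from this article, one directive per line.
ROBOTS_TXT = """\
User-Agent: googlebot
Disallow: /page.html
Sitemap: http://votre-domaine.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Googlebot may not crawl /page.html but may crawl other pages.
print(parser.can_fetch("googlebot", "http://votre-domaine.com/page.html"))   # False
print(parser.can_fetch("googlebot", "http://votre-domaine.com/index.html"))  # True

# The Sitemap line is read independently of the User-Agent group.
print(parser.site_maps())  # ['http://votre-domaine.com/sitemap.xml']
```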

You can exclude several pages from indexing. Just add one Disallow line for each page:

User-Agent: googlebot
Disallow: /page.html
Disallow: /page2.html
Disallow: /page3.html
Sitemap: http://votre-domaine.com/sitemap.xml
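
If you manage many excluded pages, generating the file from a list avoids typing errors. The following is a minimal sketch in Python; `build_robots_txt` is a hypothetical helper for illustration, not part of SiteBuilder Pro, and you would still paste its output into the "Robots.txt" tab yourself.

```python
from __future__ import annotations


def build_robots_txt(user_agent: str, disallowed: list[str], sitemap: str) -> str:
    """Assemble one User-Agent group with one Disallow line per path (hypothetical helper)."""
    lines = [f"User-Agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in disallowed]
    lines.append(f"Sitemap: {sitemap}")
    return "\n".join(lines) + "\n"


print(build_robots_txt(
    "googlebot",
    ["/page.html", "/page2.html", "/page3.html"],
    "http://votre-domaine.com/sitemap.xml",
))
```

With the three pages above, the output matches the example file line for line.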

If your "robots.txt" file is long, it helps to add comments to your lines so you can find your way around. Everything after a # on a line is a comment and is ignored by search engines:

User-Agent: googlebot
Disallow: /page1.html # test page of my website
Disallow: /tmp # contains temporary files
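
The following sketch confirms, using Python's standard `urllib.robotparser` (an offline check, not a SiteBuilder Pro feature), that comments do not change the rules. It also shows that Disallow values are prefixes, so `/tmp` covers everything under that folder. The test paths such as `/tmp/cache.txt` are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Comments start with "#" and run to the end of the line; parsers strip them.
ROBOTS_TXT = """\
User-Agent: googlebot
Disallow: /page1.html  # test page of my website
Disallow: /tmp         # contains temporary files
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ("/page1.html", "/tmp/cache.txt", "/index.html"):
    print(path, parser.can_fetch("googlebot", path))
# /page1.html False
# /tmp/cache.txt False
# /index.html True
```

Each rule needs the colon after its directive name. A line such as `Disallow /tmp` is not a valid rule, and Python's parser, for instance, silently skips it.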

To give instructions to specific search engines, start a separate group with its own User-agent line for each one:

User-Agent: googlebot
Disallow: /folder/

User-agent: Bingbot
Disallow: /folder2/
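
Each crawler follows only the group addressed to it. The following sketch checks this with Python's standard `urllib.robotparser`; the page paths and the `OtherBot` crawler name are hypothetical, chosen only to exercise the rules.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-Agent: googlebot
Disallow: /folder/

User-agent: Bingbot
Disallow: /folder2/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("googlebot", "/folder/a.html"))   # False
print(parser.can_fetch("googlebot", "/folder2/a.html"))  # True
print(parser.can_fetch("Bingbot", "/folder/a.html"))     # True
print(parser.can_fetch("Bingbot", "/folder2/a.html"))    # False
# A crawler with no matching group (and no "User-agent: *" group) is unrestricted.
print(parser.can_fetch("OtherBot", "/folder/a.html"))    # True
```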

While you are still building your website, it is often advisable to block indexing temporarily until you have finished setting up the various pages. To prevent your entire site from being indexed, insert this code:

User-agent: *
Disallow: /
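
The `*` user agent applies to every crawler, and `/` is a prefix of every path, so nothing on the site may be crawled. The following is a quick local check with Python's standard `urllib.robotparser`; `SomeOtherBot` is a hypothetical crawler name.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse(["User-agent: *", "Disallow: /"])

for agent in ("googlebot", "Bingbot", "SomeOtherBot"):
    # Every agent is refused, whatever page it asks for.
    print(agent, parser.can_fetch(agent, "http://votre-domaine.com/blog/page.html"))
```

Remember to remove these two lines once the site is ready, or search engines will keep ignoring it.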

You may want to block indexing of an entire folder except for a file that is important to you. To do this, block the whole folder with a Disallow line, then allow one or more files in that folder with Allow lines:

User-agent: googlebot
Disallow: /blog/
Allow: /blog/page.html
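
For Google, whether the Allow line wins depends on its length, not its position. Under the Robots Exclusion Protocol as standardized in RFC 9309, and in Google's documented behavior, the most specific (longest) matching rule applies, and Allow should win a tie. Not every tool follows this. Python's standard `urllib.robotparser`, for instance, applies rules in file order and stops at the first match, so it reports `/blog/page.html` as blocked for this file. The following is a simplified longest-match evaluator for a single User-agent group. The function name `is_allowed` is hypothetical, and wildcard patterns (`*`, `$`) are deliberately left out.

```python
from __future__ import annotations


def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Decide whether `path` may be crawled under one User-agent group (hypothetical helper).

    `rules` holds (directive, prefix) pairs such as ("Disallow", "/blog/").
    The longest matching prefix wins; on a tie, Allow beats Disallow.
    Wildcards (* and $) are not supported in this simplified sketch.
    """
    best_len = -1
    allowed = True  # no matching rule means crawling is allowed
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue  # an empty value matches nothing
        is_allow = directive.lower() == "allow"
        if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
            best_len = len(prefix)
            allowed = is_allow
    return allowed


BLOG_RULES = [("Disallow", "/blog/"), ("Allow", "/blog/page.html")]

print(is_allowed(BLOG_RULES, "/blog/page.html"))   # True
print(is_allowed(BLOG_RULES, "/blog/other.html"))  # False
print(is_allowed(BLOG_RULES, "/index.html"))       # True
```

Reversing the order of the two rules gives the same answers, which is why the Allow line works wherever it appears in the group.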

You can now activate and configure the "robots.txt" file on your site built with SiteBuilder Pro. The "robots.txt" file tells search engines which pages of your site you want indexed.

Don't hesitate to share your comments and questions!
